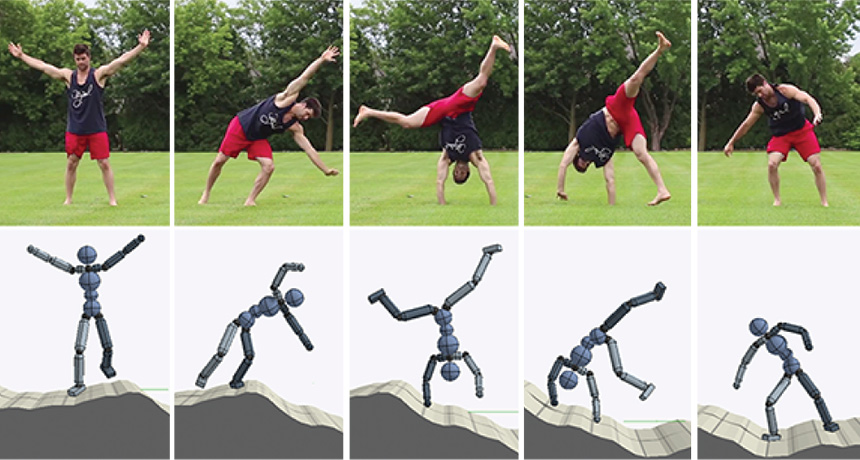

Virtual avatars learned cartwheels and other stunts from videos of people

Animated characters can learn from online tutorials, too.

A new computer program teaches virtual avatars new skills, such as dances, acrobatic stunts and martial arts moves, from YouTube videos. This kind of system, described in the November ACM Transactions on Graphics, could render more physically coordinated characters for movies and video games, or serve as a virtual training ground for robots.

“I was really impressed” by the program, says Daniel Holden, a machine-learning researcher at Ubisoft La Forge in Montreal not involved in the work. Rendering accurate, natural-looking movements based on everyday video clips “has always been a goal for researchers in this field.”

Animated characters typically have learned full-body motions by studying motion capture data, collected by a camera that tracks special markers attached to actors’ bodies. But this technique requires special equipment and often works only indoors.

The new program leverages a type of computer code known as an artificial neural network, which roughly mimics how the human brain processes information. Trained on about 100,000 images of people in various poses, the program first estimates an actor’s pose in each frame of a video clip. Then, it teaches a virtual avatar to re-create the actor’s motion using reinforcement learning, giving the character a virtual “reward” when it matches the video actor’s pose in a frame.
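To make the reward idea concrete, here is a minimal sketch in Python of how a pose-matching reward might be scored. It is an illustration only, not the researchers' code: the representation of a pose as a list of joint angles, the function names `pose_reward` and `clip_reward`, and the `scale` constant are all assumptions made for this example.

```python
import math


def pose_reward(avatar_pose, target_pose, scale=2.0):
    """Return a reward in (0, 1] that grows as the two poses get closer.

    Both poses are lists of joint angles in radians (a hypothetical
    representation). `scale` is an illustrative constant that controls
    how quickly the reward falls off with pose error.
    """
    if len(avatar_pose) != len(target_pose):
        raise ValueError("poses must have the same number of joints")
    # Sum of squared differences between corresponding joint angles.
    squared_error = sum((a - t) ** 2 for a, t in zip(avatar_pose, target_pose))
    # A perfect match gives exp(0) = 1; larger errors push the reward toward 0.
    return math.exp(-scale * squared_error)


def clip_reward(avatar_frames, target_frames):
    """Average the per-frame reward over a whole clip."""
    if not avatar_frames or len(avatar_frames) != len(target_frames):
        raise ValueError("clips must be non-empty and the same length")
    rewards = [pose_reward(a, t) for a, t in zip(avatar_frames, target_frames)]
    return sum(rewards) / len(rewards)
```

In a reinforcement learning setup, a score like this would be computed at each step of a physics simulation, and the avatar's controller would be adjusted over many trials to raise the total reward. The simulation and the learning algorithm are left out of this sketch.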

Computer scientist Jason Peng and colleagues at the University of California, Berkeley, fed YouTube videos into the system to teach characters to do somersaults, backflips, vaulting and other stunts.

Even characters with bodies drastically different from those of their human video teachers, such as animated Atlas robots, mastered these motions (SN: 12/13/14, p. 16). Characters could also perform under conditions not seen in the training video, like cartwheeling while being pelted with blocks or moving across terrain riddled with holes.

The work, also reported October 8 at arXiv.org, is a step “toward making motion capture easier, cheaper and more accessible,” Holden says. Videos could be used to render virtual versions of outdoor activities, since motion capture is difficult to do outdoors, or to create lifelike avatars of large animals that would be difficult to stick with motion capture markers.

This kind of program may also someday be used to teach robots new skills, Peng says. An animated version of a robot could master skills in a virtual environment before the computer code it learned is used to power a machine in the physical world.

These animated characters still struggle with nimble dance steps, such as the “Gangnam Style” jig, and can learn only from short clips featuring a single person. David Jacobs, a computer scientist at the University of Maryland in College Park not involved in the work, looks forward to future virtual avatars that can reenact longer, more complex actions, such as pairs of people dancing or soccer teams playing a game.

“That’s probably a much harder problem, because [each] person’s not as clearly visible, but it would be really cool,” Jacobs says. “This is only the beginning.”